Yarn-based algorithm for generating realistic cloth renderings

Brief Description

Researchers at UC Irvine have developed an efficient algorithm for generating computer-rendered textiles with fiber-level details and easy editability. This technology greatly enhances the richness of virtual fabric models and has the potential to impact various industries such as online retail, textile design, videogames, and animated movies.

Suggested uses

- Virtual cloth design, rendering, and editing

Features/Benefits

- Enhance the richness of computer-generated entertainment media such as videogames and animated movies

- Add fiber-level detail to existing yarn-based cloth designs produced by textile software and simulation tools

- Orders-of-magnitude storage savings: only twenty-two numbers need to be stored for each type of yarn

- Easy model editing

Technology Description

Fabrics are essential in our daily lives and carry rich amounts of unique information. The virtual design and modeling of fabrics are important for many applications such as online retail, textile design, and entertainment applications like animated movies. Despite the widespread presence of fabrics in computer-generated graphics, their structural and optical complexity makes them difficult to model accurately. State-of-the-art modeling of detailed fabric structures can be achieved through volume imaging (e.g., computed microtomography), but these models are difficult to manipulate once generated. Another state-of-the-art approach is yarn-based modeling, which is more amenable to editing and simulation but does not capture the richly detailed fiber-level structures needed to attain realism. Combining the realism of volume imaging with the editability of yarn-based modeling is critical for the adoption of richly detailed fabric models.

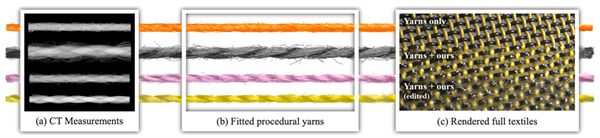

An inventor at UC Irvine has developed an automated modeling process that takes micron-scale fiber geometry of cloth yarns measured with computed tomography and outputs highly compact virtual models of these fabrics for rendering (see image). Using a variety of starting materials (cotton, rayon, silk, and polyester), they show that their algorithm can populate fiber-level structure in yarn models while maintaining editability and ease of use. The fitting algorithm is fast, taking less than ten CPU core minutes to fit each yarn. The models have been validated by comparing full model renderings with photographs of each respective yarn and by measuring parameters such as twisting, cross sections, and fiber counts. This approach does not rely on replicating small pieces of fabric to generate long yarns and therefore yields highly realistic, non-repetitive textiles. Full textiles can be generated with significant storage savings, as only twenty-two numbers need to be stored for each type of yarn. This technology combines the strengths of the volume imaging and yarn-based modeling techniques described above while avoiding the weaknesses of each.
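To make the idea of a compact procedural yarn description more concrete, the following is a minimal sketch in Python. It is not the inventor's model: the parameter names (`num_fibers`, `yarn_radius`, `twist_pitch`), the helix formulation, and all numeric values are assumptions chosen for illustration, and the sketch uses three parameters rather than the twenty-two mentioned above. It only shows how a handful of stored numbers plus a random seed can expand into many explicit fiber curves of any requested length.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class YarnParams:
    # Hypothetical parameters, for illustration only.
    num_fibers: int = 100      # fibers per yarn
    yarn_radius: float = 1.0   # cross-section radius (arbitrary units)
    twist_pitch: float = 10.0  # yarn length per full fiber revolution


def generate_fibers(params, length, samples, seed=0):
    """Return one polyline (list of (x, y, z) points) per fiber.

    Each fiber is a helix around the z-axis. Its radius and starting
    angle are drawn once from a seeded random generator, so the same
    parameters and seed always reproduce the same yarn.
    """
    rng = random.Random(seed)
    fibers = []
    for _ in range(params.num_fibers):
        # sqrt spreads fibers uniformly over the circular cross section.
        radius = params.yarn_radius * math.sqrt(rng.random())
        phase = rng.uniform(0.0, 2.0 * math.pi)
        points = []
        for i in range(samples):
            z = length * i / (samples - 1)
            theta = phase + 2.0 * math.pi * z / params.twist_pitch
            points.append((radius * math.cos(theta),
                           radius * math.sin(theta),
                           z))
        fibers.append(points)
    return fibers


params = YarnParams()
fibers = generate_fibers(params, length=50.0, samples=200)

# Number of floats needed to store the expanded geometry explicitly,
# versus the three parameters (plus a seed) stored by this sketch.
explicit_floats = sum(len(f) for f in fibers) * 3
print(len(fibers), explicit_floats)
```

Because the fibers are generated on demand rather than copied from a scanned sample, editing the yarn in this sketch amounts to changing a parameter and regenerating, and a longer yarn is produced by passing a larger `length` rather than tiling a short piece.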

State Of Development

Studies conducted and published

Related Materials

- Image rendering utilizing procedural yarn model generated in multi-stage processing pipeline - 09/10/2019

- Fitting procedural yarn models for realistic cloth rendering - 07/11/2016

Patent Status

| Country | Type | Number | Dated | Case |
| --- | --- | --- | --- | --- |
| United States Of America | Issued Patent | 10,410,380 | 09/10/2019 | 2017-194 |

Contact

- Edward Hsieh

- hsiehe5@uci.edu


Inventors

- Zhao, Shuang